Will Todd Howard’s Elder Scrolls 6 Be a Victim? AAA Games Like Cyberpunk 2077 Have Created an Arms Race That Gatekeeps Average Gamers From Ever Playing Them

The gaming industry’s obsession with pushing graphical boundaries has reached a fever pitch. As AAA studios continue their relentless pursuit of photorealistic visuals, they’re creating an unexpected problem that threatens to leave millions of players behind. The cost is measured not just in development budgets but in ever-increasing hardware demands that are turning modern gaming into an exclusive club.

As Todd Howard and his team at Bethesda gear up for The Elder Scrolls 6, a concerning trend is emerging. Recent releases show that the push for cutting-edge graphics isn’t just straining development resources; it’s also raising a technological barrier that average players find increasingly difficult to clear.

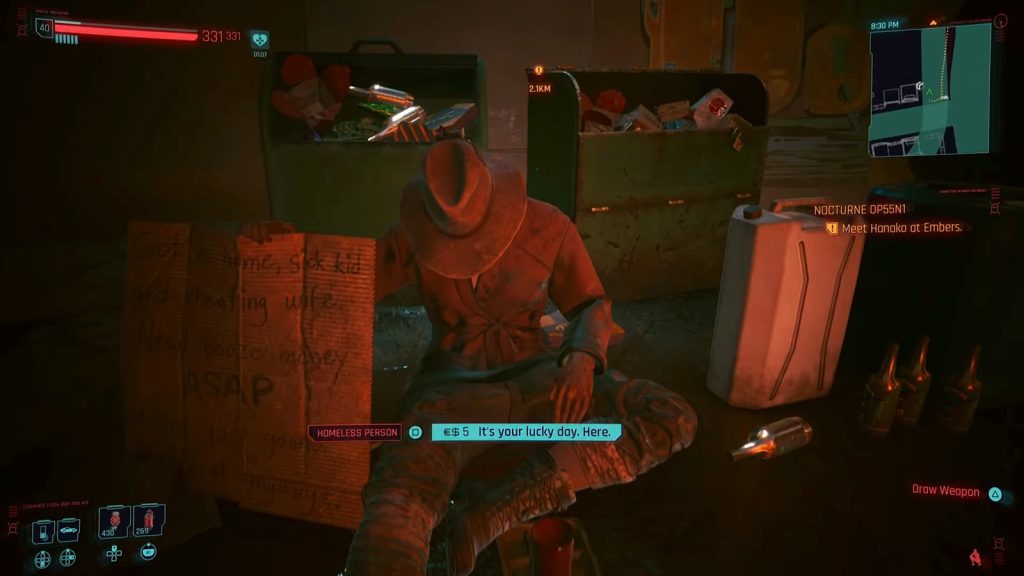

As we’ve seen with games like Cyberpunk 2077, the push for visual excellence comes with a steep price tag for developers and players alike. But is this arms race really serving the gaming community, or is it simply feeding an unsustainable cycle of escalating expectations?

The high cost of chasing pixels

Remember when the most demanding thing about gaming was convincing your parents to let you stay up for “just one more level”? Those days are long gone. Now, as The New York Times has noted, companies like Sony and Microsoft have bet billions on the idea that customers want realistic graphics, and that gamble might be backfiring spectacularly:

“It’s very clear that high-fidelity visuals are only moving the needle for a vocal class of gamers in their 40s and 50s,” says Jacob Navok, a former Square Enix executive, pointing out that younger players are perfectly content with games like Fortnite, Minecraft, and Roblox.

Recent releases have started to expose the cracks in this strategy. Take Indiana Jones and the Great Circle, which requires a GPU with hardware ray tracing, effectively locking out PC players whose older graphics cards lack it. It’s no longer just about prettier graphics; it’s about whether you can launch the game at all.

The situation with Alan Wake 2 paints a similar picture. While it’s technically playable on older hardware, the experience is so compromised that many players might as well not bother. This trend raises an uncomfortable question: is AAA gaming becoming an exclusive club for those who can afford the latest hardware?

When more pixels mean fewer players

The irony of this situation isn’t lost on industry veterans. While studios pour hundreds of millions into achieving the perfect reflection in a puddle or the most realistic hair physics, they might be alienating a significant portion of their potential audience.

“Playing is an excuse for hanging out with other people,” observes Joost van Dreunen, a market analyst and NYU professor, highlighting how the industry might be missing what players actually value.

This disconnect is particularly concerning when we look at upcoming titles like The Elder Scrolls 6. With each new console generation and graphics card series pushing the boundaries further, we have to wonder: will Todd Howard’s next masterpiece be accessible to the average player, or will it become another victim of the industry’s obsession with visual fidelity?

The solution might lie in what some developers are already calling for. As Rami Ismail provocatively asks:

“How can we as an industry make shorter games with worse graphics made with people who are paid well to work less?”

Perhaps it’s time for the industry to realize that not every game needs to push the technological envelope to be successful. After all, some of the most popular games of recent years, such as Fortnite, Minecraft, and Roblox, have prioritized accessibility and gameplay over cutting-edge graphics.

What do you think about the current state of AAA game development? Are you worried about being left behind by increasingly demanding system requirements? Share your thoughts in the comments below!

This post belongs to FandomWire and first appeared there.
